DeepSeek’s AI models can add natural language processing and generative AI capabilities to your applications, such as chatbots, automated content generation, and AI-driven workflows. If you call them from AWS Lambda’s serverless architecture, your integration scales automatically, and you pay only for the compute you use without managing servers. This tutorial shows you how to call the DeepSeek API from an AWS Lambda function.

This article assumes that you are familiar with AWS services such as Lambda and API Gateway, and with generative AI concepts. All code samples in this article can be accessed here: DeepSeek_in_AWS_Lambda


Prerequisites for DeepSeek AWS Lambda Setup

- An AWS account with permissions to create Lambda functions

- Python installed on your local machine

- A DeepSeek API key and a funded account balance (both set up on DeepSeek’s official platform website)

- Basic knowledge of AWS Lambda and Python

Step 1: Set up a DeepSeek account

Sign up and log in at https://platform.deepseek.com/

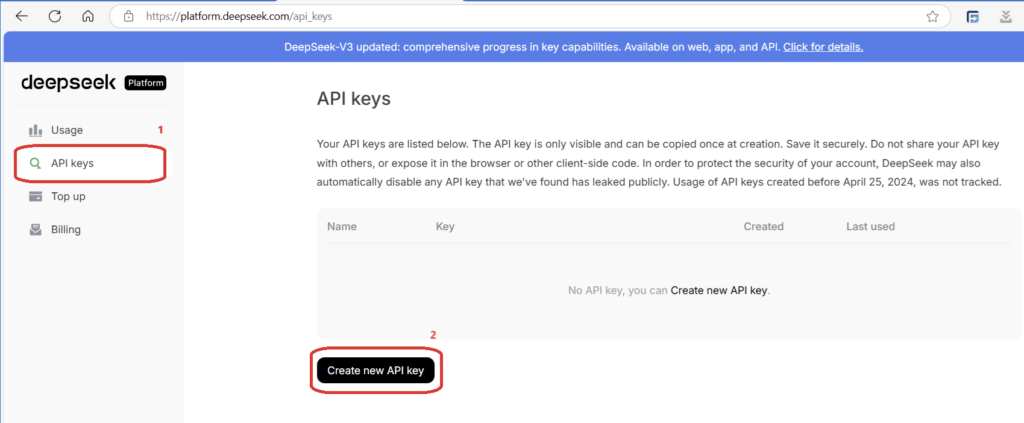

Create a DeepSeek API Access Key

Click API keys in the menu on the left, then click the ‘Create new API key’ button.

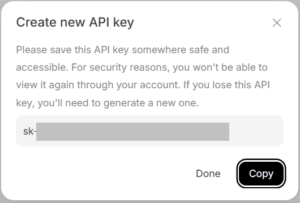

Provide a name for the key and then copy the key in the popup. For security reasons, you won’t be able to view it again. If you lose this API key, you’ll need to generate a new one. So, make sure you keep the key in a safe location.

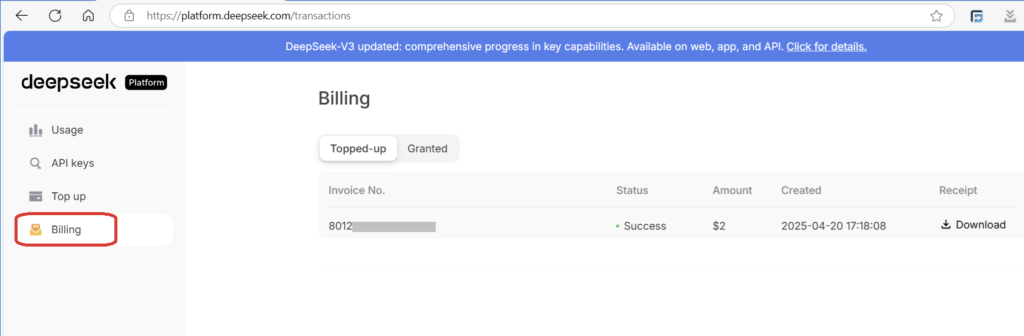

Top up your account balance

Using DeepSeek’s chat in the browser is free within certain limits. However, I have not been able to use the DeepSeek API without adding paid credit to my account. If you know of a way to use the DeepSeek API for free, please share it in the comments section so that other readers can benefit from it.

You can add credit from the billing section:

Check Balance

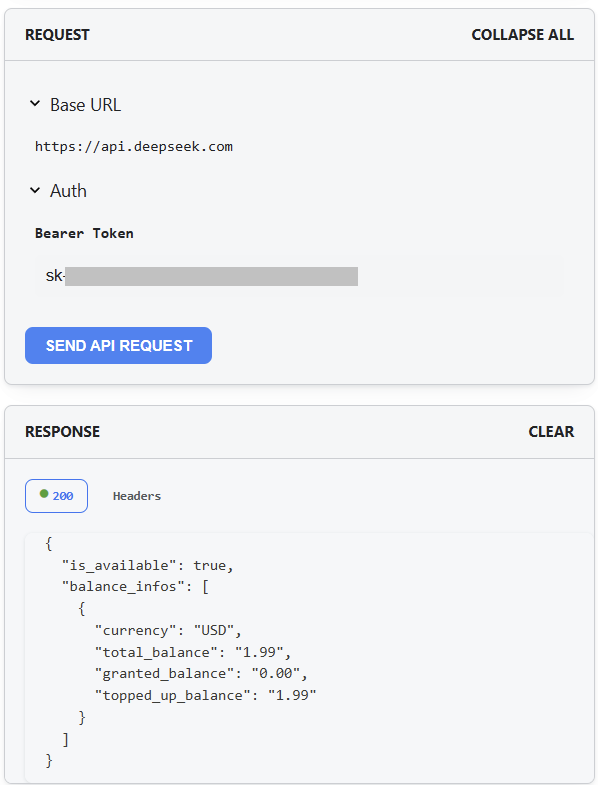

If your API calls return an “Insufficient Balance” error (HTTP 402), you can check your balance with the Get User Balance API. Go to the Get User Balance page and enter your API key in the Bearer Token field of the Request section on the right side of the page. The response shows your current balance.
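If you prefer to check your balance from a script, here is a minimal sketch that uses only Python’s standard library. It assumes the endpoint is `GET https://api.deepseek.com/user/balance` and that the JSON response contains `is_available` plus a `balance_infos` list with `currency` and `total_balance` fields. Verify both against the current Get User Balance page before relying on them. The function names are hypothetical.

```python
import json
import urllib.request
from typing import List

# Assumed endpoint; confirm it on DeepSeek's Get User Balance page
BALANCE_URL = "https://api.deepseek.com/user/balance"


def build_balance_request(api_key: str) -> urllib.request.Request:
    """Build a GET request that authenticates with a Bearer token."""
    return urllib.request.Request(
        BALANCE_URL,
        headers={"Authorization": f"Bearer {api_key}", "Accept": "application/json"},
        method="GET",
    )


def summarize_balance(payload: dict) -> List[str]:
    """Turn the balance JSON into readable lines (field names are assumptions)."""
    lines = [f"available: {payload.get('is_available')}"]
    for info in payload.get("balance_infos", []):
        lines.append(f"{info.get('currency')}: {info.get('total_balance')}")
    return lines


def fetch_balance(api_key: str, timeout: float = 10.0) -> dict:
    """Call the balance endpoint and return the parsed JSON body."""
    with urllib.request.urlopen(build_balance_request(api_key), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage: `print("\n".join(summarize_balance(fetch_balance(os.environ["DEEPSEEK_API_KEY"]))))`. Parsing is kept separate from the network call so that you can test it without an API key.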

Step 2: Create the Lambda Function

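- Go to the AWS Lambda Console.

- Click “Create function” → “Author from scratch”.

- Configure:

  - Function name: deepseek-ai-handler

  - Runtime: Python 3.9 or later

  - Architecture: x86_64 or arm64

  - Permissions: Basic Lambda execution role

- After the function is created, set Timeout to 30 seconds under “Configuration” → “General configuration” (the default is 3 seconds). Responses from deepseek-reasoner can take longer than 30 seconds, so increase the timeout further if you see timeouts.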

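Note that Python packages with compiled (native) code are not cross-platform. They must be built for Lambda’s Linux environment and for the architecture you select, or the function can fail with import errors at run time. Pure-Python packages don’t have this problem. As a rule of thumb, pick the architecture that matches the machine you package on: x86_64 for Intel/AMD machines, and arm64 for ARM machines such as Apple silicon Macs. If you package on Windows or macOS, ask pip for Linux wheels with its --platform and --only-binary=:all: options. The example code in this article was packaged in a Linux environment.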
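If you’re unsure which architecture to choose, the sketch below maps Python’s `platform.machine()` value to a Lambda architecture name. It checks only the CPU architecture, not the operating system, so native wheels still need to be Linux builds. `platform.machine()` describes the running Python interpreter, which is also what pip uses to pick wheels. This means an x86_64 Python running under Rosetta on an Apple silicon Mac reports x86_64. The set of recognized strings is an assumption that covers common values, and the function name is hypothetical.

```python
import platform
from typing import Optional

# Common platform.machine() values, lower-cased, mapped to Lambda architecture names
_ARCH_MAP = {
    "x86_64": "x86_64",   # Linux and Intel Macs
    "amd64": "x86_64",    # 64-bit Windows reports "AMD64"
    "arm64": "arm64",     # Apple silicon Macs (native Python)
    "aarch64": "arm64",   # ARM Linux
}


def lambda_architecture_for(machine: Optional[str] = None) -> str:
    """Map a machine string (default: this interpreter's) to "x86_64" or "arm64"."""
    machine = (machine or platform.machine()).lower()
    if machine not in _ARCH_MAP:
        raise ValueError(f"Unrecognized machine type: {machine!r}")
    return _ARCH_MAP[machine]
```

Run `print(lambda_architecture_for())` on your build machine, then select the matching option in the Lambda console.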

Step 3: Install DeepSeek Python SDK

Method 1: Lambda Layers

You can create a Lambda Layer that contains the DeepSeek library and then attach the layer to your Lambda function. My article How to invoke OpenAI APIs from AWS Lambda functions shows how to package the OpenAI Python library into a Lambda Layer. You can use the same method to package the DeepSeek Python library. This method is recommended if you’re creating multiple Lambda functions that use the DeepSeek library.
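For Python layers, Lambda extracts the layer under /opt and adds its python/ directory to the import path, so the packages must sit under python/ at the root of the layer ZIP. The sketch below builds such a ZIP from a folder that you have already populated with `pip install -t`. The function name and folder paths are hypothetical.

```python
import os
import zipfile
from typing import List


def build_layer_zip(site_packages_dir: str, out_zip: str) -> List[str]:
    """Zip every file in site_packages_dir under a top-level python/ folder.

    Write out_zip outside site_packages_dir so the archive doesn't include itself.
    Returns the sorted archive names that were written.
    """
    written = []
    with zipfile.ZipFile(out_zip, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(site_packages_dir):
            for name in files:
                full_path = os.path.join(root, name)
                rel_path = os.path.relpath(full_path, site_packages_dir)
                # Use forward slashes inside the archive, whatever the host OS
                arcname = "python/" + rel_path.replace(os.sep, "/")
                zf.write(full_path, arcname)
                written.append(arcname)
    return sorted(written)
```

For example, after `pip install deepseek -t ./layer_src`, call `build_layer_zip("layer_src", "deepseek-layer.zip")` and upload the result as a new layer.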

Method 2: Local Packaging (used in this article)

AWS Lambda doesn’t run pip install for you. Instead, we’ll install the dependencies locally, package them together with the code, and upload the result as a ZIP file.

Create a project folder

```bash
mkdir deepseek-lambda && cd deepseek-lambda
```

Install the DeepSeek package

```bash
pip install deepseek -t ./package
```

Zip the dependencies, then return to the project folder

```bash
cd package && zip -r ../lambda-package.zip . && cd ..
```

Step 4: Create the Python Code

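Create a file named lambda_function.py in the deepseek-lambda folder (next to package, not inside it) with the code below. The file and function names match the console’s default handler setting, lambda_function.lambda_handler.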

```python
import os
import json

from deepseek import DeepSeekAPI


def lambda_handler(event, context):
    try:
        # Use the R1 reasoning model
        model_to_use = "deepseek-reasoner"

        # Initialize the client with the API key from the environment
        client = DeepSeekAPI(api_key=os.environ["DEEPSEEK_API_KEY"])

        # Parse input: API Gateway passes the request as a JSON string in
        # "body" (which may be None); a direct invocation passes it as the event
        body = event.get("body")
        payload = json.loads(body) if body else event
        user_input = payload.get("query") or "Hello!"

        # Call the DeepSeek API (the system prompt keeps answers short)
        response = client.chat_completion(
            model=model_to_use,
            messages=[
                {
                    "role": "system",
                    "content": "You are a concise assistant. Keep responses under 100 words."
                },
                {
                    "role": "user",
                    "content": user_input[:3000]  # Truncate very long input to limit cost
                }
            ],
            temperature=0.5,  # Ignored by deepseek-reasoner; takes effect with deepseek-chat
            max_tokens=200  # Cap the response length to control costs
        )

        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({
                "response": response,
                "model": model_to_use  # For debugging
            })
        }

    except Exception as e:
        print(f"R1 Model Error: {str(e)}")
        return {
            "statusCode": 500,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps({"error": "Processing failed"})
        }
```

Notice how the code takes the query field from the input, either from the API Gateway request body or from the event itself, and uses it to build the request for the DeepSeek model. The DeepSeek API is compatible with the OpenAI API format. DeepSeek’s own documentation calls it through the official OpenAI Python SDK with base_url set to https://api.deepseek.com. So if you already have an application that uses OpenAI, you can often switch to DeepSeek by changing the API key, the base URL, and the model name.

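In this example, we use the deepseek-reasoner (R1) model, DeepSeek’s reasoning model, which works through a problem step by step before answering. Feel free to experiment with max_tokens and the input prompt. You can also try the other DeepSeek model listed below, deepseek-chat (V3). Note that temperature only takes effect with deepseek-chat. Both models use the same chat-completions request format, but deepseek-reasoner also returns its chain of thought in a separate reasoning_content field of the API response. You may need to adjust the code to handle it.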
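Because the API is OpenAI-compatible, you can also call it without any extra package. The sketch below uses only the standard library to build a chat-completions request and read the answer. It assumes the `https://api.deepseek.com/chat/completions` endpoint and the OpenAI-style response shape described in DeepSeek’s API docs: `choices[0].message.content`, plus `reasoning_content` for deepseek-reasoner. The function names are illustrative and are not part of any SDK. The code omits temperature, so the same payload suits both models.

```python
import json
import urllib.request
from typing import Optional, Tuple

# Endpoint as documented by DeepSeek at the time of writing; verify before use
CHAT_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(api_key: str, query: str, model: str = "deepseek-chat",
                       max_tokens: int = 200) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions POST request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise assistant. Keep responses under 100 words."},
            {"role": "user", "content": query[:3000]},  # same truncation as the handler
        ],
        "max_tokens": max_tokens,
        "stream": False,
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def extract_answer(response: dict) -> Tuple[str, Optional[str]]:
    """Return (answer, reasoning); reasoning is None unless reasoning_content is present."""
    message = response["choices"][0]["message"]
    return message.get("content") or "", message.get("reasoning_content")


def ask_deepseek(api_key: str, query: str, model: str = "deepseek-chat",
                 timeout: float = 25.0) -> Tuple[str, Optional[str]]:
    """Send the request and return (answer, reasoning); raises urllib.error.HTTPError on API errors."""
    with urllib.request.urlopen(build_chat_request(api_key, query, model), timeout=timeout) as resp:
        return extract_answer(json.loads(resp.read().decode("utf-8")))
```

The urlopen timeout applies to individual socket operations rather than to the whole request. Treat it as a rough guard, and keep it below the function’s timeout so the handler still has time to return an error response.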

Step 5: Deploy the Lambda Function

Add your code to the ZIP file

```bash
# Run from the deepseek-lambda folder
zip -g lambda-package.zip lambda_function.py
```
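Before uploading, it can be worth checking that the handler file and the dependency ended up at the root of the archive. A nested folder is a common cause of Runtime.ImportModuleError and ModuleNotFoundError at run time. This is a sketch. The `check_package` name is hypothetical, and the default `required_packages` value assumes the installed package is a top-level `deepseek` folder or module. Adjust it to match what pip actually placed in `package/`.

```python
import zipfile
from typing import List, Tuple


def check_package(zip_path: str, handler_module: str = "lambda_function",
                  required_packages: Tuple[str, ...] = ("deepseek",)) -> List[str]:
    """Return a list of problems found in a Lambda deployment ZIP (empty if none)."""
    with zipfile.ZipFile(zip_path) as zf:
        names = set(zf.namelist())
    problems = []
    if f"{handler_module}.py" not in names:
        problems.append(f"{handler_module}.py is not at the root of the ZIP")
    for pkg in required_packages:
        # A top-level dependency is either a pkg/ folder or a single pkg.py module
        if not any(n.startswith(f"{pkg}/") for n in names) and f"{pkg}.py" not in names:
            problems.append(f"package {pkg!r} is not at the root of the ZIP")
    return problems
```

Running `print(check_package("lambda-package.zip") or "looks good")` in the project folder gives a quick sanity check before you upload.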

Upload to AWS Lambda

In the Lambda console, go to “Code” → “Upload from” → “.zip file”, and select the lambda-package.zip file that you created in the previous step.

Configure Environment Variables

- In your Lambda function, open the “Configuration” tab

- Navigate to “Environment variables”

- Click “Edit”

- Add a new variable:

  - Key: DEEPSEEK_API_KEY

  - Value: Your actual DeepSeek API key

- Click “Save”

Step 6: Test Your Lambda Function

Configure a Test Event

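Go to “Test” → “Configure test event”. Use this JSON:

```json
{
  "query": "What is AWS Lambda?"
}
```

Run the Test

Click “Test” and check the response. You should see output similar to the following (the generated text will vary). Note that body is a JSON string:

```json
{
  "statusCode": 200,
  "headers": {"Content-Type": "application/json"},
  "body": "{\"response\": \"AWS Lambda is a serverless computing service that runs code in response to events...\", \"model\": \"deepseek-reasoner\"}"
}
```

Step 7: Connect to API Gateway (Optional)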

As it stands, the Lambda function we just created can only be invoked by callers with AWS credentials and permission to invoke it. Examples include the Lambda console, the AWS CLI or SDKs, and other AWS services. If you want to make its functionality available to the outside world, you can connect it to an Amazon API Gateway endpoint and expose it as a REST API. External apps can then call your REST API, which in turn invokes the Lambda function.

I’ve written an article on how to do this with OpenAI. You can follow it and adapt it for the DeepSeek API: How to build a REST API using Amazon API Gateway to invoke OpenAI APIs
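When API Gateway invokes the function through a Lambda proxy integration, the request body arrives as a string in event["body"]. That string may be None, or it may be base64-encoded, in which case isBase64Encoded is true. A console test or direct invocation, by contrast, passes your JSON as the event itself. A small helper such as the hypothetical `extract_query` below handles all of these cases. It is a sketch, not code from the linked article.

```python
import base64
import json
from typing import Any, Dict


def extract_query(event: Dict[str, Any], default: str = "Hello!") -> str:
    """Get the "query" field from a direct invocation or an API Gateway proxy event."""
    if "body" not in event:
        # Direct invocation or console test: the event is the payload itself
        payload: Any = event
    else:
        body = event.get("body") or "{}"
        if event.get("isBase64Encoded"):
            body = base64.b64decode(body).decode("utf-8")
        payload = json.loads(body)
    query = payload.get("query") if isinstance(payload, dict) else None
    return query if isinstance(query, str) and query else default
```

Inside the handler, `user_input = extract_query(event)` would replace the inline parsing. Malformed JSON still raises, so the handler’s existing exception block would report it.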

Troubleshooting Common Issues

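| Error | Solution |
|---|---|
| Timeout Errors | Increase the Lambda timeout (reasoning-model calls can be slow) and/or memory |
| ModuleNotFoundError | Verify that the SDK is at the root of the deployment package and that it is compatible with the function’s platform and architecture |
| 401 Authentication Errors | Check the DEEPSEEK_API_KEY environment variable |
| 402 Insufficient Balance | Check your DeepSeek balance and top up your account |
| High Latency | Use AWS Regions closest to DeepSeek’s servers |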
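For intermittent failures such as rate limiting or an overloaded server, retrying with exponential backoff is a common remedy. The sketch below wraps any call that raises urllib.error.HTTPError, such as a standard-library HTTP request to the DeepSeek API. Treating 429 (rate limit), 500 (server error), and 503 (server overloaded) as retryable is an assumption based on DeepSeek’s error-code documentation. `call_with_retries` is a hypothetical name, and `sleep` is injectable so that the logic can be tested. Keep the total wait well under the Lambda timeout.

```python
import time
import urllib.error
from typing import Callable, TypeVar

T = TypeVar("T")

# Assumed transient statuses: rate limit, server error, server overloaded
RETRYABLE_STATUS = {429, 500, 503}


def call_with_retries(fn: Callable[[], T], attempts: int = 3, base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn(), retrying retryable HTTP errors with exponential backoff (1s, 2s, 4s, ...)."""
    for attempt in range(attempts):
        try:
            return fn()
        except urllib.error.HTTPError as err:
            # Non-retryable errors (e.g. 401, 402) and the final failure propagate
            if err.code not in RETRYABLE_STATUS or attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
    raise RuntimeError("attempts must be at least 1")
```

Errors such as 401 or 402 are raised immediately, because retrying won’t fix a bad key or an empty balance.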

AWS Deployment

If you prefer using CloudFormation to deploy your AWS resources, the CloudFormation template for the complete deployment can be found here: deepseek_in_lambda_cf.yaml

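To deploy the template, run the following command:

```bash
aws cloudformation deploy \
  --template-file deepseek_in_lambda_cf.yaml \
  --stack-name deepseek-lambda \
  --parameter-overrides DeepSeekApiKey=your_api_key_here
```

If the template creates IAM resources, such as the function’s execution role, add --capabilities CAPABILITY_IAM to the command (or CAPABILITY_NAMED_IAM for named IAM resources).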

DeepSeek Models

Here’s a comparison of the two models currently available through the DeepSeek API, including their capabilities, limitations, and ideal use cases:

DeepSeek Models Comparison (2025)

| Feature | R1 | V3 |
|---|---|---|
| Model ID | deepseek-reasoner | deepseek-chat |
| Mode | Reasoning: returns its chain of thought (reasoning_content) before the final answer | Standard chat |
| Multimodal | ❌ (text only) | ❌ (text only) |
| Code Support | Strong | Good |
| Math Support | Advanced | Good |
| Languages | 50+ | 100+ |
| Context Window | 64K tokens at R1’s launch; see the Models & Pricing page for current limits | 64K tokens at R1’s launch; see the Models & Pricing page for current limits |
| Sampling Parameters (temperature, top_p) | Accepted but ignored | Supported |
| Pricing Tier | Higher per token than V3 at launch | Lower than R1 at launch |
| Use Cases | Data analysis; scientific research; deep logical inference; analytics and problem-solving | Enterprise chatbots; customer support automation; natural language understanding; context-aware communications |

DeepSeek Pricing

As with other platforms such as OpenAI, DeepSeek pricing is based on tokens. A token is the smallest unit of text that the model processes, and it can be a whole word, part of a word, a number, or a punctuation mark. Input and output tokens are priced separately, and you pay for the total number of each.

For detailed pricing information, check out the official pricing page: Models & Pricing | DeepSeek API Docs
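Because input and output tokens are billed separately, the cost of a request is roughly (input tokens × input price + output tokens × output price), with prices quoted per million tokens. The sketch below does that arithmetic. The prices in the usage line are placeholders, not DeepSeek’s actual rates, so take current figures from the pricing page. The sketch also ignores discounts such as cheaper cached input. Two further points: token counts appear in an OpenAI-style response’s usage field (prompt_tokens and completion_tokens), and for deepseek-reasoner the output count includes the reasoning tokens.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimate one request's cost from token counts and per-million-token prices."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000
```

For example, with placeholder prices of 0.50 per million input tokens and 2.00 per million output tokens, `estimate_cost(1_500, 200, 0.50, 2.00)` gives 0.00115 per call. Multiply that by your expected monthly call volume to budget.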

Best Practices

Model Selection

- Start with the general-purpose deepseek-chat (V3) model for prototyping. Switch to deepseek-reasoner (R1) for tasks that need multi-step reasoning, such as math, logic, or complex analysis.

- Match the model to the task. A general-purpose model may give weaker results on specialized, reasoning-heavy tasks, while a reasoning model is slower and uses more output tokens on simple ones.

Security

- Use AWS Secrets Manager for API keys instead of environment variables.

- Restrict Lambda execution with IAM least-privilege policies.
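Following the first security point, here is a minimal sketch that loads the key from AWS Secrets Manager once per execution environment and reuses it across warm invocations. Several details are assumptions: the secret name deepseek/api-key, the hypothetical DEEPSEEK_SECRET_ID environment variable, and storing the key as a plain-string secret. The function’s role also needs secretsmanager:GetSecretValue on that secret. boto3 ships with the Lambda Python runtime, and the optional client parameter lets you test the function without AWS.

```python
import os
from typing import Any, Optional

# Module-level cache: survives across warm invocations of the same environment
_cached_key: Optional[str] = None


def get_deepseek_api_key(secret_id: Optional[str] = None, client: Any = None) -> str:
    """Return the DeepSeek API key, fetching it from Secrets Manager on first use."""
    global _cached_key
    if _cached_key is None:
        if client is None:
            import boto3  # included in the Lambda Python runtime
            client = boto3.client("secretsmanager")
        # Hypothetical env var and default secret name; adjust to your setup
        secret_id = secret_id or os.environ.get("DEEPSEEK_SECRET_ID", "deepseek/api-key")
        _cached_key = client.get_secret_value(SecretId=secret_id)["SecretString"]
    return _cached_key
```

In the handler, `DeepSeekAPI(api_key=get_deepseek_api_key())` would replace the environment-variable lookup. Because the key is cached, a rotated key is picked up only by new execution environments.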

Cost Optimization

- Set Lambda concurrency limits to avoid unexpected DeepSeek API costs.

- Use caching (e.g., Amazon ElastiCache) for repeated queries

- Choose the correct model for the task and keep an eye on the max_tokens parameter to control costs.
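For the caching point, a lighter option to try before ElastiCache is a small in-memory cache inside the Lambda execution environment. It absorbs repeated identical queries, although each environment keeps its own copy and the cache is lost on cold starts. This is a swapped-in technique, not ElastiCache. `ResponseCache` is a hypothetical name, and `call_model` stands in for whatever function actually calls DeepSeek.

```python
import hashlib
from collections import OrderedDict
from typing import Callable


class ResponseCache:
    """Tiny LRU cache keyed by (model, prompt); lives as long as the Lambda environment."""

    def __init__(self, max_entries: int = 256):
        self.max_entries = max_entries
        self._store: "OrderedDict[str, str]" = OrderedDict()

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Hash so that long prompts don't become huge dictionary keys
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, prompt: str,
                    call_model: Callable[[str, str], str]) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        answer = call_model(model, prompt)
        self._store[key] = answer
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return answer
```

Create the cache at module level (outside lambda_handler) so that warm invocations share it. Cached answers make the most sense for deterministic, non-personalized prompts.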

Performance

- Increase Lambda memory (improves CPU allocation)

- Use Lambda Layers for shared dependencies

Conclusion

You’ve now built a serverless AI assistant using DeepSeek + AWS Lambda in Python! This setup is ideal for:

- Chatbots

- Content generation

- Data analysis

By integrating DeepSeek’s powerful AI models with AWS Lambda, you can build scalable, serverless AI applications without managing infrastructure. This approach combines the flexibility of DeepSeek’s API with the reliability and scalability of AWS Lambda.

For more advanced use cases, consider exploring DeepSeek’s other models and API endpoints, or adding additional AWS services like DynamoDB for persistent storage of conversation history.

Need help? Drop a comment below!
